Industry observers agree on one thing: the year 2026 marks a shift in the very nature of cyberattacks. Artificial intelligence no longer simply assists attackers, it now automates the entire intrusion cycle. At the same time, ransomware continues to evolve towards ever more sophisticated extortion models, while critical infrastructures are becoming priority targets. A cross-analysis of the predictions made by the main cybersecurity players reveals the trends that will shape the next twelve months.

Table des matières

ToggleHow AI is transforming cybercriminals’ methods

Malware that learns and adapts in real time

New generations of ransomware incorporate learning capabilities that enable them to analyze the environments they infiltrate and adjust their behavior accordingly. Dmitry Volkov, CEO of Group-IB, anticipates the emergence of a new generation of malware capable of autonomous propagation, similar to historical computer worms such as WannaCry or NotPetya, but with unprecedented sophistication. These new variants probe their targets, select systems to compromise and adjust their evasion techniques according to the defenses encountered in order to evade traditional detection.

The integration of increasingly powerful AI will enable these malware to automate the entire kill chain: security vulnerability discovery, exploitation, lateral movement and coordination of large-scale operations. This automation drastically reduces the time available to defense teams, who must now react at the speed of algorithms rather than human operators.

Mass phishing attacks

The industrialization of phishing attacks has reached a new level. No longer requiring manual operations, artificial intelligence systems generate ultra-personalized phishing campaigns by exploiting the public profiles, exchange history and professional network of their targets. This mass personalization, coupled with the production of thousands of variants in a matter of seconds, makes detection by traditional filtering increasingly uncertain.

However, according to Bitdefender, the danger lies not only in the offensive use of AI. Thehaphazard adoption of AI tools by employees creates a considerable attack surface that organizations struggle to control. Employees sometimes use unauthorized AI services, share sensitive data with third-party platforms and circumvent established security policies, often without measuring the consequences. This flawed governance of in-house AI represents a major vulnerability, as it exposes the company to data leaks and confidentiality breaches without any external intrusion.

These enterprise AI systems also present intrinsic vulnerabilities. Prompt injection attacks can manipulate the behavior of AI assistants by injecting malicious instructions into the documents or data they process, turning legitimate tools into attack vectors without the user noticing.

Social engineering reaches a new level

AI makes the usual warning signs invisible

One of the most worrying developments is the refinement of AI-assisted social engineering. The grammatical errors, awkward wording and translation mistakes that once betrayed phishing attempts are now a thing of the past. Language models (LLMs) generate perfectly worded, contextually appropriate and psychologically manipulative communications, making it increasingly difficult to distinguish between legitimate messages and attempted scams.

Voice cloning and deepfakes, formidable weapons

Today, voice cloning represents one of the most profitable threats for attackers. With just a few seconds of audio recording, easily accessible via social networks or institutional sites, cybercriminals can reproduce an executive’s voice with uncanny fidelity. This technique is particularly effective for vishing (voice phishing) scams, targeting bank transfer validation processes and authorizations for sensitive transactions.

Video deepfakes are also gaining in quality, although their production costs remain higher. However, according to Bitdefender, these techniques are reserved for attacks aimed at targets with high profit potential.

Techniques that abuse users’ trust gain ground

Alongside these technological innovations, simple but effective techniques such as ClickFix continue to bypass technical defenses by abusing users’ trust in familiar interfaces. These attacks are based on believable scenarios that induce victims to voluntarily execute malicious code, thinking they are solving a legitimate technical problem. By presenting bogus system error messages or fake update notifications, attackers exploit Internet users’ reflexes, turning them into unwitting accomplices in their own infections.

Ransomware automates its operations

A criminal ecosystem structured like a business

Ransomware-as-a-Service (RaaS) functions as a truly organized business model, bringing together developers, affiliates and tool providers in a nebulous but structured ecosystem. All these players work according to a logic of efficiency: “maximize the impact and minimize the time and effort required for disruption“, as Dmitry Volkov writes. Groups such as DragonForce already embody this industrialization with their white-label model that democratizes access to sophisticated attacks.

AI lowers the barrier to entry for affiliates

AI agents are gradually being integrated into RaaS offerings, making advanced automated capabilities accessible even to low-skilled affiliates. Once an intrusion has been established in their prey’s network, these agents take care of internal recognition, privilege elevation and encryption preparation without requiring in-depth technical expertise.

Current automation already covers rapid encryption of virtualized infrastructures, automatic destruction of backups and deactivation of defense solutions. The integration of AI amplifies this dynamic by enabling criminal groups to carry out several simultaneous operations with a minimum of human resources, thus expanding the pool of actors capable of conducting large-scale campaigns.

Extortion becomes more diversified and sophisticated

From simple encryption to multi-faceted strategies

The encryption-only extortion model is losing ground to approaches that combine data theft, the threat of publication, selective encryption and sometimes denial-of-service (DDoS ) attacks. This hybrid approach multiplies the pressure exerted on victims by simultaneously exploiting several vulnerabilities.

The encryption-only extortion model is in decline. Double extortion, combining encryption and data theft with publication threats, has paved the way for even more sophisticated strategies: selective encryption, massive exfiltration and sometimes denial-of-service attacks(DDoS). This hybrid approach multiplies the pressure exerted on victims by simultaneously exploiting several vulnerabilities.

Cybercriminals are taking this logic one step further. At Resilience, Tom Egglestone observes that groups no longer target a single organization, but compromise the company, its subsidiaries, its suppliers and sometimes its customers at the same time. This mesh of cross-pressures accelerates reputational and operational damage, making the victim’s position untenable while significantly increasing the likelihood of payment.

Extortion without encryption gains ground

Dr Ann Irvine, Chief Data and Analytics Officer at Resilience, sees a trend emerging: malicious actors are increasingly forgoing encryption in favor of demanding money directly in exchange for non-disclosure of stolen data. This changes the nature of the threat. Traditional recovery strategies, notably backups, become ineffective in the face of the risk of public exposure of sensitive information such as customer data, industrial secrets or confidential communications.

The supply chain, the Achilles heel of organizations

The supply chain is becoming a major attack vector. Attackers have understood this: compromising a supplier with privileged access to multiple customers represents a far more profitable investment than targeting each organization individually. A single intrusion at one provider can spread to dozens of corporate customers. Shared infrastructures (cloud, SaaS, managed services) are increasingly attracting the attention of attackers.

Bribing an employee to bypass defenses

Recorded Future reports an increase in attempts by ransomware groups to recruit employees. The latter offer money to employees in exchange for identifiers or sensitive information. A BBC journalist, for example, was approached directly by cybercriminals. This approach completely bypasses technical defenses by exploiting human vulnerability.

AiTM attacks automated by AI

When multi-factor authentication is no longer enough

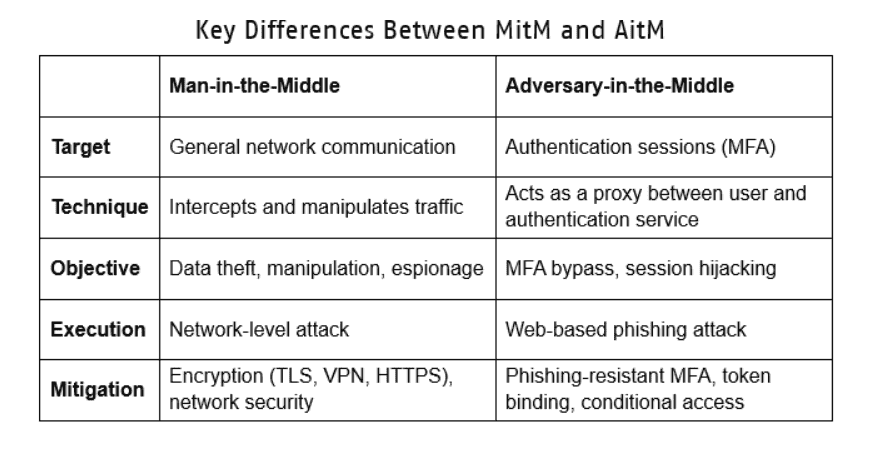

Adversary-in-the-Middle (AiTM) attacks are gaining in popularity with cybercriminals. These attacks not only steal credentials, but also intercept and exploit already authenticated sessions. When a user legitimately logs on to a service protected by multi-factor authentication, the attacker captures the valid session token and uses it to access systems without ever needing the password or second factor. In January 2026, Microsoft documented a multi-stage AiTM campaign targeting the energy sector, where a single compromised account was used to send over 600 phishing e-mails via SharePoint.

Currently, these AiTM operations require significant manual management to maintain the persistence of compromised sessions and bypass authentication mechanisms. But the integration of AI into these frameworks is changing the game. By 2026, according to Group-IB, attackers will be integrating AI to automate session hijacking and credential collection on a massive scale. The verification systems we use today – two-factor authentication, biometrics, passwordless systems – will become ineffective in the face of AI-driven AiTM operations, which are more adaptive than current defenses. Only phishing-resistant authentication methods such as FIDO2/WebAuthn, which link authentication to the device via public key cryptography, can withstand AiTM attacks, as no identifiers or tokens are transmitted over the network.

Cloud APIs: a massive new attack surface

When cloud automation becomes a vulnerability

Modern businesses rely heavily on cloud ecosystems and APIs to unlock growth and efficiency. APIs, designed for automation, enable operations to be orchestrated on an unprecedented scale thanks to their machine-controlled interfaces. But this efficiency comes with a downside: AI-driven threats can also exploit these same interfaces. By 2025, Palo Alto Networks has seen a 41% increase in attacks targeting APIs, a trend directly linked to the explosion of agentic AI that massively relies on these interfaces.

Cloud environments are controlled by code. Permissions, storage, network settings and security policies are defined via APIs, making these configurations machine-readable. Artificial intelligence can therefore understand and even modify cloud configurations by exploiting these APIs. Attackers will target this automated layer to cause massive disruption, expose control surfaces or alter critical configurations. Gartner, the world leader in technology and cybersecurity research, even predicts that by 2026, 80% of data breaches will involve insecure APIs, making this automation layer the most critical weak link in cloud infrastructures.

This dynamic creates a new battlefield, where APIs give both sides – attackers and defenders – the ability to multiply their operations in the cloud indefinitely. The advantage will go to whoever has the computing power and resources to exploit this scalability.

OAuth attacks: the domino effect between cloud applications

Interconnected SaaS environments create a network of trust between applications: Microsoft 365, Google Workspace, Slack and Salesforce exchange authorizations via OAuth. OAuth compromises exploit precisely this network of trust. An attacker who tricks a user into granting permissions to a malicious application obtains a legitimate anchor point. This compromised application can then propagate laterally between services by exploiting existing trust relationships, without requiring passwords or multi-factor authentication.

This threat took on a concrete dimension in August 2025 with the compromise of the Salesloft Drift application. Attackers exploited stolen OAuth tokens to access hundreds of Salesforce instances and Google Workspace accounts, demonstrating how a single compromised application can contaminate an entire enterprise SaaS ecosystem in a matter of hours.

Invisible backdoors in AI-assisted code

Artificial intelligence is transforming software development practices. Coding assistants are dramatically speeding up production, but the growing reliance on AI-generated code is reducing the rigor of reviews. Many teams now allow code to reach production environments more quickly, neglecting in-depth checks that would have detected anomalies.

This development increases the risk of supply chain attacks. Adversaries insert hard-to-detect backdoors into legitimate software and popular libraries used by thousands of developers. With the rise of AI-assisted coding tools, state actors could attempt to influence or manipulate these systems to integrate backdoors and vulnerabilities on a large scale.

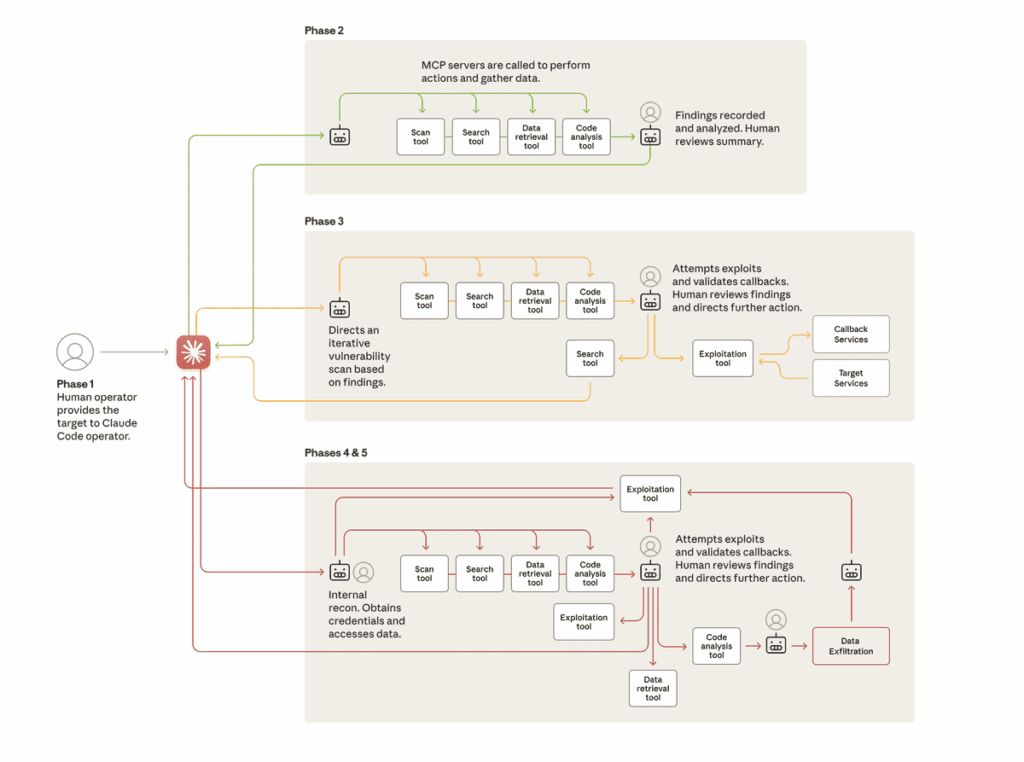

Group-IB points out that this combination of widespread adoption and reduced scrutiny represents an evolving opportunity for adversaries to compromise systems and development pipelines, an opportunity they are unlikely to overlook. This prediction materialized dramatically in September 2025, when Anthropic detected and neutralized the first large-scale AI-orchestrated cyberattack. A malicious actor had manipulated Claude Code to infiltrate some thirty global organizations almost autonomously, demonstrating that the threat of AI-assisted backdoors is not just theoretical.

Organizing the defensive response

Unified SOCs and cyber-fraud fusion centers

Faced with the acceleration of AI-driven attacks, Security Operations Centers are investing heavily in intelligent automation to detect and respond to threats at competitive speed. This evolution requires near real-time internal collaboration to close security gaps.

Traditional SOCs, organized around disparate tools generating huge volumes of alerts, create response silos. This model is gradually giving way to Cyber Fraud Fusion Centers. These hybrid, cross-functional teams dramatically improve risk management thanks to shared data, dashboards offering connected visibility, centralized automated models to standardize and correlate information, and real-time feedback loops enabling instant action at the first sign of a threat.

Artificial intelligence plays a central role in automating the triage and enrichment of alerts, enabling analysts to concentrate on complex investigations requiring judgment and creativity. The keywords for this transformation: continuous, real-time, collaborative.

AI transparency, a trust imperative

AI systems will increasingly make autonomous security decisions. But lack of supervision can become detrimental, leading to bias, misclassification and ethical failures. AI transparency and auditability, what English-speakers call “Explainable AI” (XAI), will move from being an option to a non-negotiable imperative for organizations seriously deploying AI capabilities in security.

This transparency must apply to training data to prevent poisoning, to decision logic to avoid exploitable biases, and to the results validation process so that analysts understand why the model acted in a certain way. Despite companies’ eagerness to integrate AI, most are not ready to fully trust it. The black box problem, additional vulnerabilities and compliance risks have made rapid AI adoption a major risk.

Dmitry Volkov points out that: this shifts the central security question from “Where do you use AI?” to “What do you let AI decide, and can you explain it?”.

Tomorrow’s challenges

The year 2026 will not mark an abrupt break, but the acceleration of trends already at work. Artificial intelligence amplifies the capabilities of attackers and defenders alike, creating a race in which automation becomes indispensable. Ransomware continues to professionalize, methodically exploiting weak links to maximize profitability. Digital infrastructures are under increasing pressure, reflecting their strategic importance.

Successful organizations will be those that abandon the paradigm of absolute prevention and adopt a posture of resilience. Rather than seeking to prevent all intrusions, they prepare themselves to be compromised, and build their capacity to rapidly detect, effectively contain and fully restore their operations. This approach involves truly isolated backups, regularly tested continuity plans and a clear understanding of critical dependencies.

Controlling third-party risk requires a radical change: continuous monitoring of suppliers’ security posture, precise mapping of potential impacts, and contingency plans to maintain operations even in the event of a major incident at a supplier. Precise quantification of exposure goes beyond superficial compliance assessments to include real operational losses, erosion of customer confidence and regulatory consequences.

Finally, the fundamentals remain as relevant as ever: rigorous multi-factor authentication, network segmentation, systematic patching and ongoing training. Advanced technologies cannot compensate for weak foundations. Only organizations that have established a solid security hygiene will be able to take full advantage of the defensive capabilities offered by AI. Risks are not hypothetical scenarios. They are hitting organizations today that were unprepared for this new normal of cyber threat.

Sources

Group-IB: Stranger Threats Are Coming: Group-IB Cyber Predictions for 2026 and Beyond

Lumu: Cybersecurity Predictions 2026: The Post-Malware & AI Era

Bitdefender: Cybersecurity Predictions 2026: Hype vs. Reality

Cyber Resilience: Cybersecurity and Insurance Predictions 2026

Recorded Future: Ransomware Tactics 2026

BBC News: Ransomware gang tries to hire journalist as insider

SecurityWeek: Cyber Insights 2026: API Security – Harder to Secure, Impossible to Ignore

Microsoft Security Blog: Multistage AiTM phishing and BEC campaign abusing SharePoint

MITRE ATT&CK®: Adversary-in-the-Middle

Swissbit: Bypassing MFA: The Rise of Adversary-in-the-Middle (AitM) Attacks

Palo Alto Networks: Palo Alto Networks Report Reveals AI Is Driving a Massive Cloud Attack Surface Expansion

Wowrack: Preparing for 2026 Cloud Threats: A Guide to Cloud Security Trends and Risk Management

Google Cloud Blog: Widespread Data Theft Targets Salesforce Instances via Salesloft Drift

Anthropic: Disrupting the first reported AI-orchestrated cyber espionage campaign

Google Cloud : Cybersecurity Forecast 2026 report